Organizations are generating an unprecedented volume of digital content to satisfy customer demand for exceptional experiences. This surge has led to a projected valuation of the content marketing industry at $600 billion, reflecting its significant role in engaging audiences and driving sales.

However, managing this vast array of digital assets—including images, videos, documents, and audio files—has become increasingly challenging. Enterprises now face the task of efficiently organizing, storing, and retrieving tens of thousands of digital assets, necessitating robust strategies to handle the complexities of modern content management.

Fortunately, advances in artificial intelligence (AI) are transforming how digital asset management software operates, making it more intelligent, automated, and user-friendly. By automating and improving metadata generation, providing additional context and detail, and enabling natural language processing to interpret complex search queries, AI can significantly increase the efficiency and accuracy of finding and managing assets within a DAM, saving teams valuable time and effort and accelerating time to market.

Content Discoverability With Smart Search

As the volume and variety of assets continuously increase, it’s no surprise most marketers are overwhelmed by the content management process. Though content is the primary way to engage buyers and customers, it remains trapped in silos, stored in various locations across numerous repositories, individual desktops, and even communication and collaboration tools like email and Slack. As a result, users either can’t find the assets they need, or it takes them far too much time and effort to find those assets.

Ensuring brand compliance and the appropriate legal use of assets also becomes a huge challenge. All of this has a severe impact on operational efficiency and scalability, increasing costs, risk, and time to market, and degrading the user experience.

Teams need to break free of this content chaos; that’s where DAM software comes in. However, it’s important to understand that not all DAMs deliver the level of AI-powered capabilities that modern businesses require to fully realize the promise of DAM.

For example, while some DAMs may offer Optical Character Recognition (OCR), the capability may not work for Word, PowerPoint, PDF, and other document formats. The text in those files stays unsearchable, and the DAM simply becomes a dumping ground rather than a strategic component of your content operations strategy.

Aprimo’s AI services extend beyond just tags and include descriptions, OCR, video and audio text track creation, similar item searches, facial recognition, and even the option to create customer-specific AI-based tagging models.

Aprimo’s AI-powered capabilities include:

- AI tagging models for images, video & 3D files

- AI-written descriptions

- Optical Character Recognition (OCR)

- Facial recognition search

- Speech2text & language detection

- Content recommendations

- Visually-similar searches

- Trained AI

- Automated inheritance

- Embedded data

According to a recent report by Fortune Business Insights, the global Digital Asset Management market is projected to grow from $4.59 billion in 2024 to $16.18 billion by 2032, driven by the increasing integration of AI and machine learning. These advancements are revolutionizing DAM systems with features like automated tagging, intelligent search, and content usage analytics, significantly reducing inefficiencies.

As marketers continue to manage vast content repositories, AI-enhanced DAM solutions are helping organizations streamline workflows, reduce content waste, and eliminate redundant efforts, paving the way for more effective and efficient content strategies.

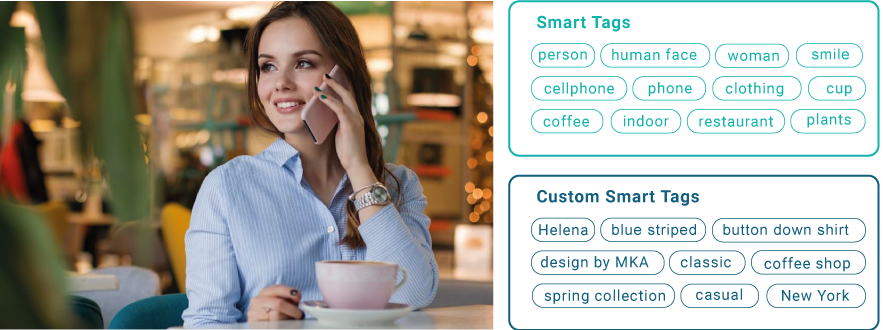

AI-Based Smart Tagging

AI-based tagging allows assets to be tagged automatically when they enter the DAM. This smart tagging service can take human work, human error, and human incompleteness out of adding metadata. Tagging assets and adding metadata both serve the larger purpose of improving the searchability and findability of the assets in the DAM, which in turn stimulates asset reuse and increases the ROI of the DAM.

Nowadays, most DAMs are equipped with smart tagging services, which are based on standard API services from providers such as Microsoft, Google, Amazon, Clarifai, and others. What most of these tagging services have in common is that they work on visual recognition, meaning they can describe an asset based on what you can ‘spy with your little eye.’

The standard vision APIs describe images or videos with a generic vocabulary, making them applicable to everyone. More advanced DAMs can also plug in trained AI, which is based on machine learning models designed specifically for you as a customer. These models can even teach the DAM system to use company- and business-specific vocabularies for tagging.

Let’s look at an example:

A (generic) smart tagging service will use generic tags to describe the image, such as: person, human face, smile, cellphone, phone, clothing, woman, indoor, tableware, girl, coffee, cup, beauty, photography, sitting, drink, vacation, drinking, restaurant.

A trained smart tagging service can provide tags that are more specific to your line of business. For example, if you are in the business of selling jewelry, you would probably like tags specifying the exact watch or bracelet the woman is wearing in the picture. On the other hand, if you are a retailer, you would probably want the smart tagging to be able to identify that the woman is wearing a striped classic shirt and that the model is Helena.

Business-specific tagging models can be taught to identify brands, products, objects, and people, making them extremely valuable in automated metadata generation. With a generic AI service, expect to invest little effort and to get results that are good enough. With a trained AI service, expect to invest more effort in training and to get excellent results.
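To make the difference concrete, here is a minimal sketch of how tags from a generic service and a trained, business-specific model might be combined when an asset enters the DAM. It is an illustration only and not Aprimo's implementation: the `Tag` class, the `merge_tags` function, the confidence scores, and the 0.6 threshold are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    label: str
    confidence: float  # 0.0-1.0, as a hypothetical tagging service might report it


def merge_tags(generic, custom, min_confidence=0.6):
    """Combine generic and custom tags, dropping weak and duplicate labels.

    Custom tags come first because they use the business vocabulary.
    """
    seen = set()
    merged = []
    for tag in list(custom) + list(generic):
        label = tag.label.strip()
        key = label.lower()  # compare labels case-insensitively
        if tag.confidence < min_confidence or key in seen:
            continue
        seen.add(key)
        merged.append(label)
    return merged


# Hypothetical output of a generic vision API (note the repeated "cup").
generic_tags = [Tag("woman", 0.98), Tag("cup", 0.91), Tag("coffee", 0.88),
                Tag("cup", 0.75), Tag("vacation", 0.41)]
# Hypothetical output of a model trained on a retailer's own vocabulary.
custom_tags = [Tag("striped classic shirt", 0.93), Tag("Helena", 0.87)]

print(merge_tags(generic_tags, custom_tags))
# ['striped classic shirt', 'Helena', 'woman', 'cup', 'coffee']
```

The low-confidence "vacation" tag and the repeated "cup" are dropped, while the business-specific tags lead the list.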

Smart Tagging

- Uses generic AI services

- Generic keywords

- Same for all customers

- Included in DAM subscription

- Expectation: good enough

Custom Smart Tagging

- Uses learned AI service

- Custom keywords (your own tagging vocabulary)

- Specific to your Aprimo DAM

- Add-on to DAM subscription

- Expectation: excellent

AI-powered smart tagging transforms content management by making asset organization faster, more accurate, and effortlessly scalable.

AI-Based Facial Analysis

Aprimo AI offers face detection technology to enable searching the DAM for similar faces.

As with most of the Aprimo AI features, you can configure rules that control which imagery face detection runs on. This avoids generating face IDs for content where it doesn’t make sense, or for faces that shouldn’t be discoverable for privacy reasons. It should be noted that the technology uses IDs and not names to make matches, so faces are kept anonymous.

If the service processes an image, a face ID will be created and stored for the asset. You can think of this as a kind of fingerprint of a face. This fingerprint is also stored in the face model – which is dedicated to your DAM tenant.

If there are several people in an image, a separate face ID will be generated for each face, including the faces of people who are not completely front-facing. The face model is built and refreshed in a background process each time faces are added to the DAM.

When a user asks to see faces similar to those in an image, the search process sends the detected faces to the AI service, which looks up similar faces and returns the matching images as search results. If multiple people are in the image, you will first get all the images matching as many of those people as possible, and further down in the result set you will find the imagery matching only some or one of the faces.
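The ordering described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how the Aprimo AI service is built: faces are represented as small numeric vectors standing in for the "fingerprints", similarity is measured with cosine similarity, and `rank_by_matched_faces`, the `face_model` dictionary, and the 0.9 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_matched_faces(query_faces, face_model, threshold=0.9):
    """Rank assets by how many of the query faces they contain.

    query_faces: face vectors detected in the image the user started from.
    face_model:  dict mapping asset ID -> list of face vectors for that asset.
    """
    results = []
    for asset_id, faces in face_model.items():
        matched = sum(
            1 for q in query_faces
            if any(cosine_similarity(q, f) >= threshold for f in faces)
        )
        if matched:
            results.append((asset_id, matched))
    # Most matched faces first; the asset ID breaks ties deterministically.
    results.sort(key=lambda item: (-item[1], item[0]))
    return results


# Toy "fingerprints" for three different people.
face_a, face_b, face_c = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]
face_model = {
    "asset-1": [face_a],
    "asset-2": [face_a, face_b],
    "asset-3": [face_c],
    "asset-4": [face_b],
}

print(rank_by_matched_faces([face_a, face_b], face_model))
# [('asset-2', 2), ('asset-1', 1), ('asset-4', 1)]
```

The asset containing both people ranks first, the assets containing only one of them follow, and the asset with neither is left out. No names are involved at any point, only vectors and asset IDs.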

AI-Powered Quality Control and Brand Consistency

Maintaining brand consistency across a growing volume of content is a challenge for modern organizations. AI-powered tools simplify this process by automatically detecting off-brand elements such as incorrect logos, mismatched colors, or inconsistent messaging. These technologies ensure that every piece of content aligns with established brand guidelines, reducing the risk of errors that could dilute your brand’s impact.

Aprimo’s automated compliance checks and brand safety features take this a step further by embedding brand standards directly into the content creation and review process. AI monitors for compliance in real time, flagging deviations before they reach the public, ensuring your brand stays cohesive and trustworthy.
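As a small illustration of what one automated brand check could look like, the sketch below flags colors that are not close to any color in an approved palette. It is not Aprimo's compliance engine: the function names, the example palette, and the tolerance value are assumptions, and plain Euclidean distance in RGB space is only a rough stand-in for perceptual color difference.

```python
import math


def hex_to_rgb(value):
    """Convert a color like '#0055A4' into an (R, G, B) tuple."""
    value = value.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def off_brand_colors(used_colors, brand_palette, tolerance=30.0):
    """Return the used colors that are not within `tolerance` of any palette color."""
    palette = [hex_to_rgb(c) for c in brand_palette]
    flagged = []
    for color in used_colors:
        rgb = hex_to_rgb(color)
        nearest = min(math.dist(rgb, p) for p in palette)
        if nearest > tolerance:
            flagged.append(color)
    return flagged


# Hypothetical brand palette and colors extracted from a piece of content.
brand_palette = ["#0055A4", "#FFFFFF"]
used_colors = ["#0057A6", "#FF0000", "#FEFEFE"]

print(off_brand_colors(used_colors, brand_palette))
# ['#FF0000']
```

The two near-matches pass, while the red that is far from every palette color is flagged for review.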

At scale, this level of precision is invaluable. With AI ensuring quality control, teams can focus on creativity and strategy while maintaining the consistency and professionalism customers expect.

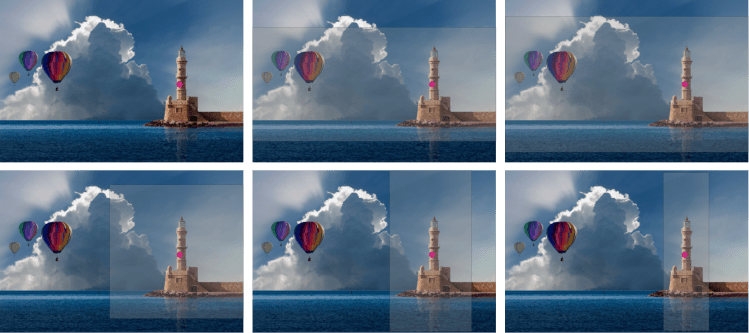

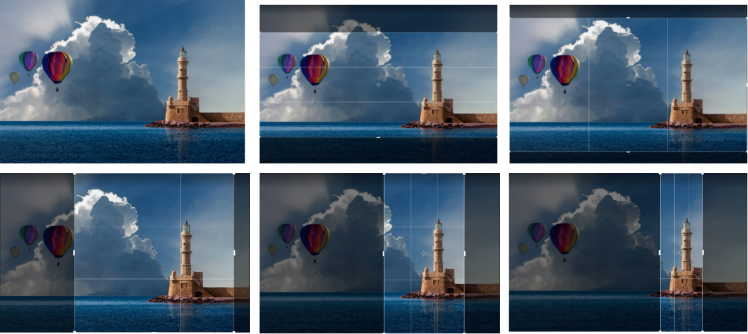

AI-Based Smart Cropping

The Smart Cropping feature in Aprimo DAM, powered by Aprimo’s AI, does not use focal point cropping. So, what’s the difference?

Aprimo’s Smart Cropping feature uses AI to determine where to crop based on the areas of interest it detects. Areas of interest can be based on objects and people. The cropping algorithm works not just with one focal point in the image, but with all the areas of interest it detects. Aprimo’s Smart Cropping service is designed to protect the image integrity, so it will protect as much of the image and its original proportions as it can.

For images that feature one dominant object or person more or less in the center of the image, there will be little difference between focal point cropping and AI-based cropping. However, once objects are no longer in the center of the image, the AI-based cropping algorithms will be better at protecting the proportions of the original image.

Example image:

If a user sets a focal point, they would put it in the center of the lighthouse tower. The pink dot on the picture shows where the user would likely set the focal point, and the comparable crops produced by both technologies are shown below.

Focal point cropping:

AI-based cropping (note: the entire image not grayed out is the crop, not just inside the white lines):

Crops that are landscapes will come out looking very similar. There will be subtle differences in the proportion of sky and sea respectively above and under the lighthouse. With focal-based cropping, extra spacing will be divided equally from the focal point. With AI-based cropping, the proportions of the image will be respected, leaving less sea under the lighthouse than there is sky above it (compare top center images for both technologies).

More noticeable differences between the two technologies are visible in square and portrait crops. In focal point cropping, the lighthouse will be in the horizontal center (middle) of the cropping area, whereas with the AI-based crops the lighthouse will be slightly more to the right to respect the original proportion of the image.
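The difference can be sketched along one axis of the image. This is a simplified model and an assumption on our part, not the actual Aprimo algorithm: it treats the subject as a single point, and it interprets "respecting the original proportion" as keeping the subject at the same relative position in the crop as in the original. The function names and pixel values are hypothetical.

```python
def focal_point_crop_start(image_len, crop_len, focal):
    """Center the crop window on the focal point, clamped to the image bounds."""
    start = focal - crop_len // 2
    return max(0, min(start, image_len - crop_len))


def proportional_crop_start(image_len, crop_len, focal):
    """Keep the subject at the same relative position in the crop as in the original."""
    return focal - (focal * crop_len) // image_len


# A 1000 px wide image with the lighthouse at x = 700, cropped to 400 px wide.
image_width, crop_width, lighthouse_x = 1000, 400, 700

focal_start = focal_point_crop_start(image_width, crop_width, lighthouse_x)
prop_start = proportional_crop_start(image_width, crop_width, lighthouse_x)

print(focal_start, lighthouse_x - focal_start)  # 500 200
print(prop_start, lighthouse_x - prop_start)    # 420 280
```

With focal point cropping, the lighthouse sits 200 px into the 400 px crop, exactly in the middle. With the proportional approach it sits 280 px in, or 70% of the way across, which matches its position in the original image and places it slightly more to the right.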

Which Technology is Better?

Some pictures will lean more toward a focal point approach and some toward an AI-based approach. If technology makes a series of different crops on an image, there is a high probability that a user will have to fine-tune some crops in the series. It’s important to understand that as powerful as it is, technology will never get 100% of the crops right all the time.

You may want to check the crop before it gets published, which you can’t do with dynamically rendered crops. It is much less risky to make crop renditions in a controlled environment and then push them to a CDN.

When looking at cropping approaches, you should evaluate the efficiency gains you will get from the technology, not solely based on the cropping algorithm but also based on the cropping implementation. Below are several questions to illustrate this point.

- Is the technology relying on user action to crop? I.e., does a user need to define a focal point before cropping can start? AI-based cropping does not rely on a user to generate crops, and crops are pre-rendered based on content rules.

- How good is a user at setting a focal point? With focal point cropping the quality of the crop area depends on how good the user is at setting the focal point. With smart cropping, the quality of the cropping area is defined by the AI service and is therefore less impacted by human error.

- What percentage of the crops does the technology get right? Crops that don’t fully meet expectations have to be manually fine-tuned. How often does that happen and how easy is that? Even AI will not get 100% of the crops right; if 70-80% of the crops do not have to be fine-tuned, the performance gain is high. But it should also be easy for the user to correct the remaining 20-30%.

- How easy is it to identify which images need cropping applied? Aprimo DAM allows the user to apply AI contextually through rules. You can easily configure which content crops should be generated and in what content lifecycle phase this should happen. It makes no sense to render crops on content that is not mature enough (work-in-progress), or that may never need cropping applied, such as the headshots of your board members.
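The last point, applying AI contextually through rules, can be illustrated with a short sketch. The rule format below is hypothetical and is not Aprimo's configuration syntax; the `Asset` fields, the `CROP_RULES` dictionary, and the phase and type names are assumptions chosen to mirror the examples in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    content_type: str     # e.g. "product-photo" or "headshot"
    lifecycle_phase: str  # e.g. "work-in-progress" or "approved"


# Hypothetical rule configuration: crop only mature content, never headshots.
CROP_RULES = {
    "eligible_phases": {"approved"},
    "excluded_types": {"headshot"},
}


def should_generate_crops(asset, rules=CROP_RULES):
    """Return True if crops should be pre-rendered for this asset."""
    return (asset.lifecycle_phase in rules["eligible_phases"]
            and asset.content_type not in rules["excluded_types"])


assets = [
    Asset("lighthouse.jpg", "product-photo", "approved"),
    Asset("campaign-draft.psd", "product-photo", "work-in-progress"),
    Asset("board-member.jpg", "headshot", "approved"),
]

for asset in assets:
    print(asset.name, should_generate_crops(asset))
# lighthouse.jpg True
# campaign-draft.psd False
# board-member.jpg False
```

Only the approved product photo qualifies, so no rendering effort is spent on work-in-progress content or on imagery that never needs cropping.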

Bridging the Gap Between DAM and Content Operations

Modern organizations need more than just a repository for their assets; they require a seamless connection between asset management and broader content operations. AI-powered tools automate workflows, enabling smoother collaboration and reducing manual effort across the entire content lifecycle.

Aprimo’s SMART DAM solution exemplifies this connectivity, offering integration capabilities with over 80 systems, from marketing platforms to collaboration tools. Assets flow effortlessly through creation, approval, distribution, and archiving, eliminating silos and improving efficiency.

By bridging the gap between DAM and content operations, AI enables teams to work smarter, ensuring that assets are accessible and aligned with the goals and timelines of larger campaigns. The result is a unified workflow that drives consistency, speed, and success at every stage of the content process.

Embrace the Future of Content Management with AI-Driven DAM Solutions

Smart Search, Smart Tagging, Facial Analysis, Brand Consistency, and Smart Cropping are just some of the ways AI already enhances digital asset management software usability in Aprimo, but we are already working towards more innovation in the platform using generative AI.

As our roadmap rolls out and as AI continues to evolve, we will be making more integrations publicly available very soon. This is why we believe it is so important to work under a set of principles that will guide us in our responsible use of AI in our content operations platform.

Experience Aprimo’s cutting-edge features in action and discover how AI simplifies workflows, ensures brand consistency, and boosts efficiency. Request your personalized demo today! Have you heard about our new ChatGPT integration? Check it out here.